VMware announced vRealize Automation 8 (vRA8) a few months ago at VMworld. It is a solid step forward from the previous version, and if you are all-in on VMware’s Software-Defined Datacenter story, it can add real value to your environment.

But even customers who are heavily invested in VMware don’t use VMware exclusively within their private cloud. Most, if not all, datacenters consist of heterogeneous infrastructure. So how do you work with your f5 load balancers? Your Palo Alto firewalls? Your Datadog dashboards? What about certificates and secrets?

These examples (and more!) are typical application requirements that vRA cannot handle natively. The good news is that we can fill these gaps with the Terraform provider ecosystem. In this post we look at one example: how you can use Terraform to extend vRA8 with f5 integration, without any customisation of vRA at all.

How is this possible?

vRA8 uses YAML-based Blueprints to describe the infrastructure that you want to provision. Blueprints play a similar role to Terraform code: they provide an “as-code” model for interacting with the vRA8 API. VMware themselves are embracing Terraform in a number of ways, with the vSphere, NSX-T and vCloud Director providers. The latest addition is the new vRA8 provider. VMware are building it to offer rich infrastructure-as-code interaction with the vRA8 API, as an alternative to native vRA8 Blueprints.
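The configuration below is a rough sketch of what working with the vRA8 provider looks like in practice. It declares the provider next to the other providers used in this post and authenticates with a refresh token. It assumes Terraform 0.14 or later (for `sensitive` variables) and the public registry sources `vmware/vra`, `F5Networks/bigip` and `hashicorp/random`. The variable names `vra_url` and `vra_refresh_token` are hypothetical.

```hcl
terraform {
  required_providers {
    # VMware's vRA8 provider
    vra = {
      source = "vmware/vra"
    }
    # F5 BIG-IP provider, used for the load balancer objects
    bigip = {
      source = "F5Networks/bigip"
    }
    # Generates a unique suffix for the Deployment name
    random = {
      source = "hashicorp/random"
    }
  }
}

# Hypothetical variable names; supply the values via a tfvars file or environment
variable "vra_url" {
  type = string
}

variable "vra_refresh_token" {
  type      = string
  sensitive = true
}

provider "vra" {
  url           = var.vra_url
  refresh_token = var.vra_refresh_token
}
```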

The snippet below shows an example of the vra_machine resource, along with the Deployment we create to contain it. As you would expect, the Terraform provider closely mirrors the vRA8 resource schema. That includes support for constraints, the mechanism vRA8 uses for placement logic. Constraints let you express intent about the compute, networking and storage requirements of a given virtual machine, and vRA8 places the machine accordingly. The full code for this example is in this repo, including a sample tfvars file (yes, the vRA token was revoked before publishing).

```hcl
# A vRA Deployment to group the machines, with a random suffix for uniqueness
resource "vra_deployment" "this" {
  name        = "${var.name_prefix}-${random_id.this.hex}"
  description = ""
  project_id  = data.vra_project.this.id
}

# One machine per IP address supplied in var.ip_addresses
resource "vra_machine" "this" {
  count = length(var.ip_addresses)

  name          = "${var.name_prefix}-${count.index}"
  description   = var.machine_description
  project_id    = data.vra_project.this.id
  image         = var.image
  flavor        = var.flavor
  deployment_id = vra_deployment.this.id

  nics {
    network_id = data.vra_network.this.id
    addresses  = [var.ip_addresses[count.index]]
  }

  # Placement constraint: vRA8 must honour this tag expression (mandatory = true)
  constraints {
    mandatory  = true
    expression = var.tags
  }
}
```
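The snippet above references several objects that are not shown: `random_id.this`, `data.vra_project.this`, `data.vra_network.this` and several input variables. The versions in the repo may differ. The sketch below shows what they might look like, assuming the project and network are looked up by name. The variable names `project_name` and `network_name` are hypothetical, and the remaining inputs (`image`, `flavor`, `machine_description`) would follow the same pattern.

```hcl
# Random suffix used in the Deployment name (hashicorp/random provider)
resource "random_id" "this" {
  byte_length = 4
}

# Assumed lookups by name; project_name and network_name are hypothetical variables
data "vra_project" "this" {
  name = var.project_name
}

data "vra_network" "this" {
  name = var.network_name
}

variable "project_name" {
  type = string
}

variable "network_name" {
  type = string
}

variable "name_prefix" {
  type = string
}

# One machine (and later one f5 node) is created per address in this list
variable "ip_addresses" {
  type = list(string)
}

# Assumed to be a single vRA constraint expression string
variable "tags" {
  type = string
}
```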

To add the f5 objects, we use the f5 BIG-IP provider. I’ve used only a small subset of its options here to illustrate the concept.

```hcl
# One f5 node per machine IP address
resource "bigip_ltm_node" "this" {
  count = length(var.ip_addresses)

  name             = "/Common/${var.name_prefix}-${count.index}"
  address          = var.ip_addresses[count.index]
  connection_limit = 0
  dynamic_ratio    = 1
  monitor          = "/Common/icmp"
  description      = ""
  rate_limit       = "disabled"
}

resource "bigip_ltm_pool" "this" {
  name                = "/Common/${var.name_prefix}-pool"
  load_balancing_mode = "round-robin"
  description         = ""
  monitors            = ["/Common/https"]
  allow_snat          = "yes"
  allow_nat           = "yes"
}

# Attach every node to the pool on port 443; the count must match the node count
resource "bigip_ltm_pool_attachment" "this" {
  count = length(var.ip_addresses)

  pool = bigip_ltm_pool.this.name
  node = "${bigip_ltm_node.this[count.index].name}:443"
}

resource "bigip_ltm_virtual_server" "this" {
  name                       = "/Common/${var.virtual_server_name}"
  destination                = var.virtual_server_ip
  description                = var.virtual_server_description
  port                       = var.virtual_server_port
  pool                       = bigip_ltm_pool.this.id
  profiles                   = ["/Common/serverssl"]
  source_address_translation = "automap"
  translate_address          = "enabled"
  translate_port             = "enabled"
}
```
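The f5 resources above also need a configured bigip provider. The sketch below assumes basic username/password authentication against the BIG-IP management address and Terraform 0.14 or later for `sensitive` variables. The variable names are hypothetical.

```hcl
# Hypothetical variable names for the BIG-IP connection details
variable "bigip_address" {
  type = string
}

variable "bigip_username" {
  type = string
}

variable "bigip_password" {
  type      = string
  sensitive = true
}

provider "bigip" {
  address  = var.bigip_address
  username = var.bigip_username
  password = var.bigip_password
}
```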

The net result? A picture paints a thousand words.

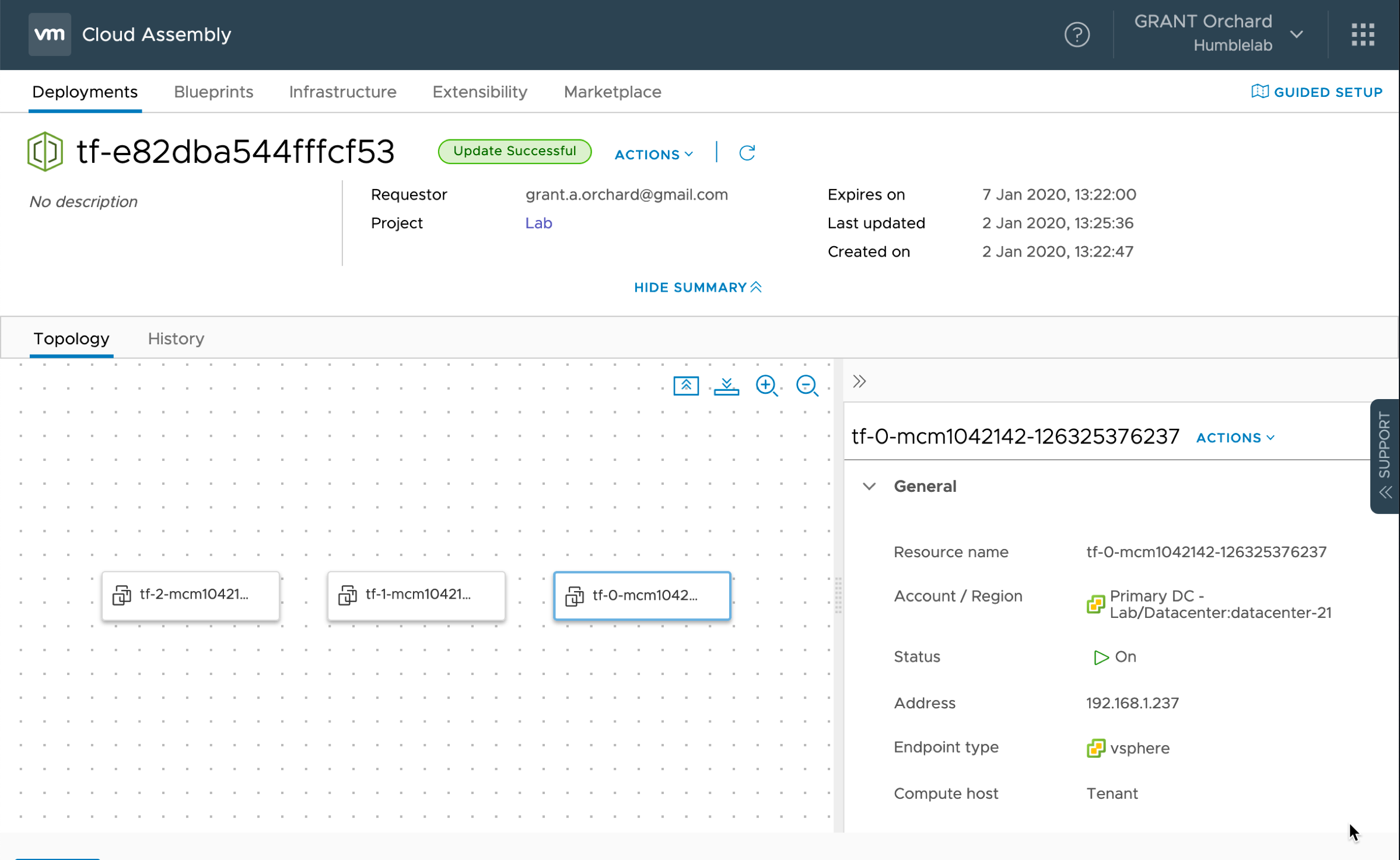

Our machines provisioned within the vRA Deployment.

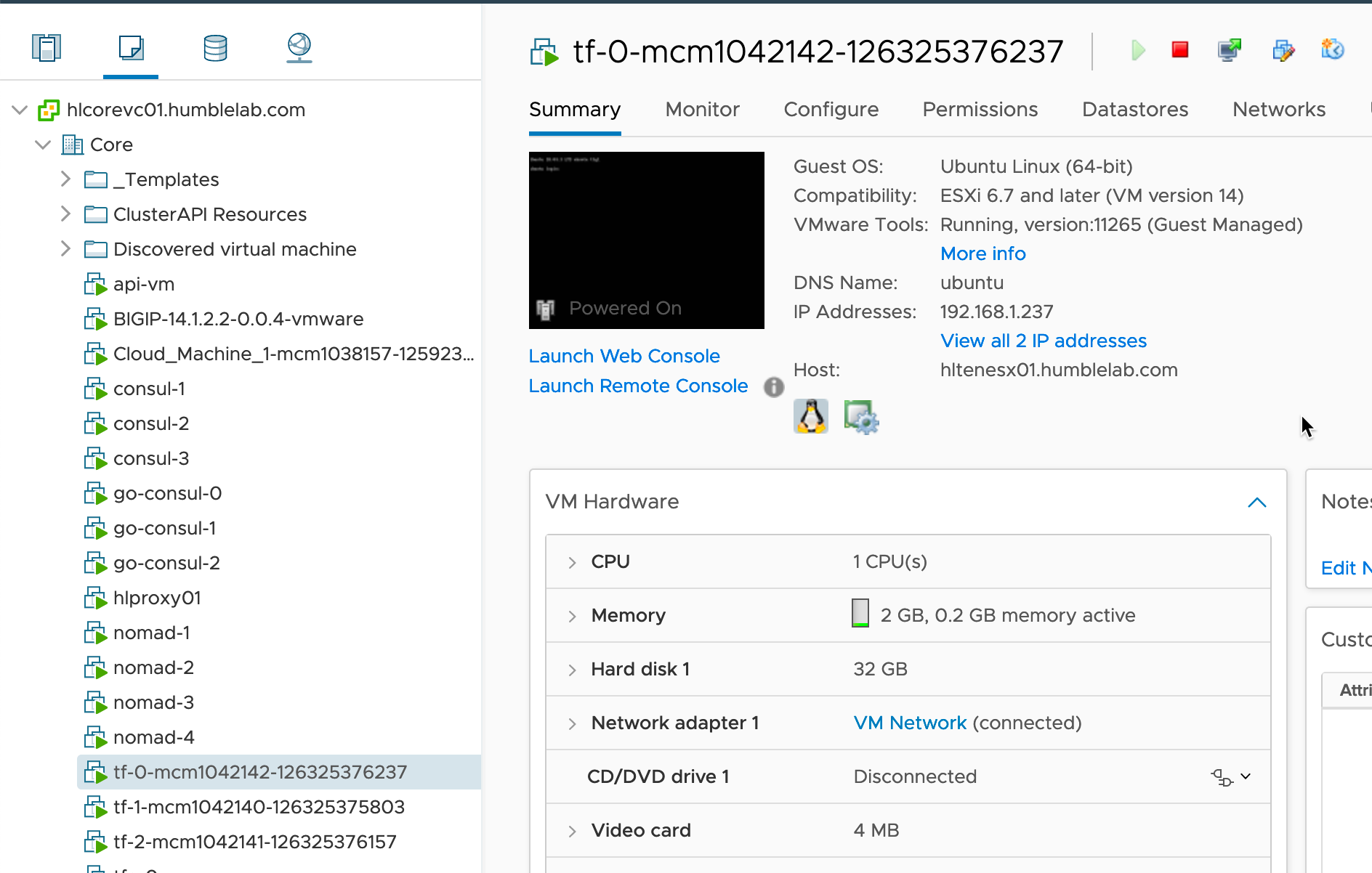

Those same machines present in vCenter.

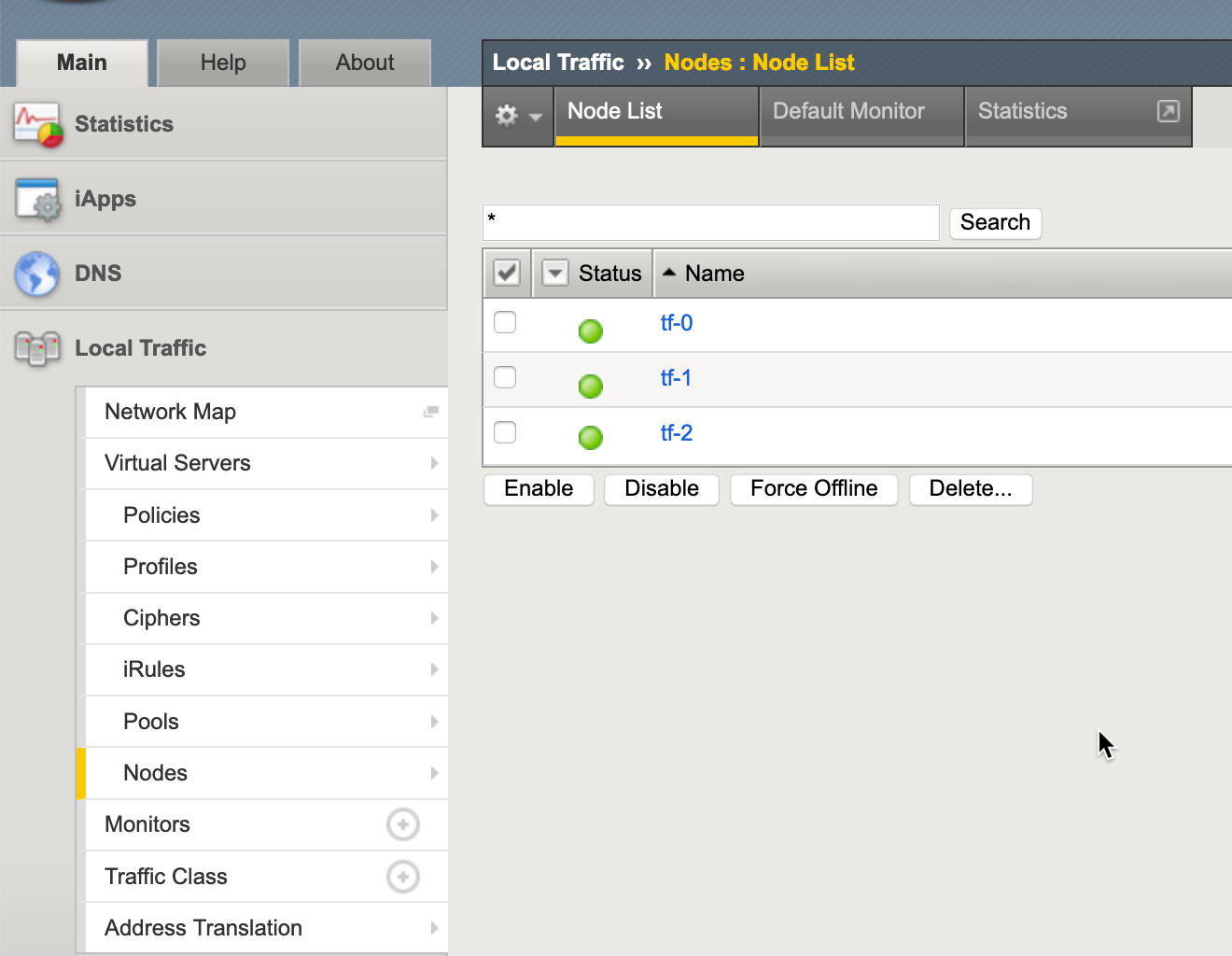

The associated f5 node objects.

Conclusion

As you can see, Terraform provides a simple, transparent way to extend beyond the VMware Software-Defined Datacenter into the wide and varied infrastructure in your datacenter, with no customisation or professional services required!